Popular Tools by VOCSO

In today’s data-driven world, web scraping is a key way to gather insights, monitor competitors, and extract critical data from websites. Whether you’re a business owner, researcher, or developer, being able to collect that data efficiently matters. The right web scraping tool saves time and effort and automates repetitive tasks, leaving more room for the analysis itself.

We’ll explore 20 of the top web scraping tools, each offering different features for different scraping needs. Whether you need an easy-to-use platform with no coding required or a powerful framework for handling large-scale scraping projects, you’ll find an option here to suit your project’s requirements.

Octoparse

Octoparse is one of the leading web scraping tools, primarily designed for users who want to collect data without any programming experience. With its no-code interface, Octoparse allows users to quickly extract data by simply pointing and clicking on the elements they want to scrape. The visual workflow interface makes it easy to create and customize scraping tasks, and it supports data extraction from both static and dynamic web applications.

Octoparse Key Features:

No-Code Interface: Ideal for users with no coding experience, Octoparse’s intuitive interface lets you select elements from a webpage to scrape, making it accessible for everyone.

Advanced Workflow Automation: Users can schedule scraping tasks to run at regular intervals, ensuring fresh data is gathered automatically.

Cloud-based Storage: Octoparse offers cloud-based data storage, allowing you to save and export your scraped data securely.

Octoparse Use Cases:

- For users who need a simple, no-code solution for data scraping.

- Businesses that require automatic data collection on a regular basis without needing a technical background.

ParseHub

ParseHub is another powerful web scraping tool that allows users to extract data from complex websites, including those with dynamic content powered by JavaScript. With its point-and-click interface, ParseHub enables users to build sophisticated scraping tasks without writing a single line of code. The tool can handle both static and dynamic sites, making it versatile for a wide range of scraping applications.

ParseHub Key Features:

JavaScript Handling: ParseHub’s ability to handle websites with dynamic content generated by JavaScript makes it a great choice for scraping data from complex sites like e-commerce stores or social media platforms.

Advanced XPath Support: ParseHub uses XPath, a query language that allows users to define precise data extraction rules, making it perfect for users who need more control over their scraping tasks.

Cloud and Local Extraction: You can run ParseHub both locally and in the cloud, offering flexibility based on your needs.

ParseHub Use Cases:

- Scraping complex websites that use JavaScript for content rendering.

- Businesses needing to gather data from dynamic sources such as e-commerce websites or news portals.

Mozenda

Mozenda is a professional-grade web scraping platform designed for both businesses and individuals who need to extract data at scale. It offers a no-code interface, combined with powerful features for data collection, cleaning, and storage. Mozenda’s cloud-based service is scalable and customizable, making it an ideal choice for enterprise-level projects.

Mozenda Key Features:

Cloud-Based Scraping: Mozenda offers cloud-based scraping with automatic updates and data storage, enabling users to easily manage large-scale data extraction.

Data Organization: It provides powerful tools for organizing, filtering, and cleaning scraped data, ensuring that the extracted information is structured and ready for analysis.

Advanced Automation: Mozenda supports automated scraping workflows, allowing businesses to set up recurring scraping tasks to gather data regularly.

Mozenda Use Cases:

- Enterprises that need to scale their web scraping efforts and handle large volumes of data.

- Data-driven businesses looking for an automated way to collect and organize data for analysis.

WebHarvy

WebHarvy is a point-and-click web scraping tool designed for users who need to collect data from visually rich websites. Its visual interface suits scraping product listings, images, and even video content, which makes it a good fit for image-heavy sites.

WebHarvy Key Features:

Point-and-Click Interface: WebHarvy’s visual interface allows users to click on the elements they want to scrape, which simplifies the data extraction process.

Automatic Pattern Detection: WebHarvy can automatically detect patterns on websites and suggest scraping tasks accordingly, making it user-friendly.

Image Scraping: Unlike many other tools, WebHarvy supports image scraping, allowing users to download images alongside other data points.

WebHarvy Use Cases:

- Collecting data from product listings and e-commerce websites, particularly when images are involved.

- Web scraping tasks that involve data extraction from visually rich content like galleries or multimedia platforms.

Dexi.io

Dexi.io is a cloud-based web scraping tool that combines scraping, data storage, and automation in one platform. It provides users with a powerful browser-based interface for scraping both simple and dynamic websites. Dexi.io is well-suited for businesses looking for advanced automation to scale their scraping efforts.

Dexi.io Key Features:

Cloud-Based Automation: With Dexi.io, all scraping operations run in the cloud, offering flexibility and scalability for large-scale data collection projects.

Real-Time Data: The platform offers real-time data extraction, ensuring that users get the most up-to-date information available on the web.

Customizable Scraping Workflows: Dexi.io provides users with the ability to set up custom workflows, including data extraction, data cleaning, and even scheduling tasks.

Dexi.io Use Cases:

- Large-scale web scraping projects that need real-time updates.

- Businesses that require automated data collection and processing in the cloud.

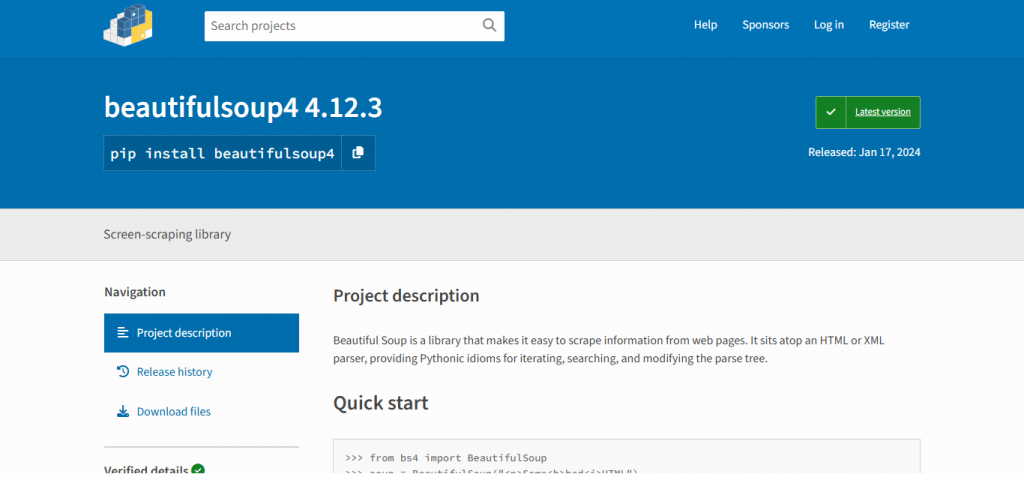

Beautiful Soup

Beautiful Soup is a popular Python library for web scraping. Unlike the tools above with graphical interfaces, it requires some coding knowledge. It is ideal for developers who need to parse HTML and XML documents and extract data programmatically. Beautiful Soup does not download pages itself; it pairs well with the third-party requests library, which fetches the page content that Beautiful Soup then parses.

Beautiful Soup Key Features:

Flexible Parsing: Beautiful Soup parses HTML and XML documents and lets you extract data by tag name, attribute, or CSS selector. It does not support XPath itself; for XPath queries, use a library such as lxml directly.

Integration with Python Libraries: It integrates well with other Python libraries such as requests and pandas, enabling more advanced data analysis workflows.

Simple Syntax: Despite being a coding-based tool, Beautiful Soup is known for its simple and easy-to-understand syntax, making it beginner-friendly for Python developers.

Beautiful Soup Use Cases:

- Developers who prefer working with Python and need a tool for web scraping that integrates with other Python libraries.

- Custom web scraping projects that require flexibility and advanced data manipulation.
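
As a rough illustration of the parsing workflow described above, the sketch below pulls names, links, and prices out of a small HTML snippet. The markup, the class names, and the `extract_products` helper are invented for the example; in a real project the HTML would typically come from a `requests.get(...)` call rather than an inline string.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, inlined so the sketch runs without network access.
HTML = """
<ul id="products">
  <li class="product"><a href="/p/1">Widget</a><span class="price">$9.99</span></li>
  <li class="product"><a href="/p/2">Gadget</a><span class="price">$19.50</span></li>
</ul>
"""


def extract_products(html: str) -> list[dict]:
    """Return one dict per <li class="product"> element."""
    soup = BeautifulSoup(html, "html.parser")  # parser bundled with Python
    products = []
    for item in soup.select("li.product"):       # CSS selector lookup
        link = item.find("a")                     # tag-name lookup
        price = item.select_one("span.price")
        products.append(
            {
                "name": link.get_text(strip=True),
                "url": link["href"],
                "price": price.get_text(strip=True),
            }
        )
    return products


if __name__ == "__main__":
    for row in extract_products(HTML):
        print(row)
```

The resulting list of dicts can be passed straight to `pandas.DataFrame(...)` if further analysis is needed.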

Scrapy

Scrapy is an open-source web scraping framework built for Python developers. It is highly versatile and supports large-scale web scraping projects. Scrapy allows developers to create custom spiders that can navigate websites, scrape data, and save it in structured formats. It’s ideal for developers who need a powerful and scalable solution for data collection.

Scrapy Key Features:

High Performance: Scrapy is designed for speed and performance, making it perfect for scraping large websites or crawling multiple sites simultaneously.

Built-in Data Export Options: It allows you to save scraped data in various formats, including JSON, CSV, and XML, making it easy to integrate with other data processing tools.

Advanced Features: Scrapy offers advanced features like request scheduling, handling cookies, and integrating with databases.

Scrapy Use Cases:

- Developers who need a scalable solution for large-scale web scraping tasks.

- Custom projects that require fine-grained control over the scraping process.

Selenium

Selenium is a robust and popular framework for automating web browsers, widely used for both web scraping and web testing. It allows users to simulate human interactions with web pages, which is particularly useful when dealing with dynamic websites that require actions like clicking buttons, filling out forms, or handling JavaScript rendering.

Selenium Key Features:

Browser Automation: Selenium can automate browsers like Chrome, Firefox, and Internet Explorer, simulating user actions such as clicks, scrolling, and data input.

Cross-Browser Testing: It supports multiple browsers, such as Chrome, Firefox, and Safari, making it useful for scraping websites that behave differently across browsers.

Integration with Python and Other Languages: Selenium works seamlessly with Python, Java, and other programming languages, giving developers flexibility in their coding approach.

Selenium Use Cases:

- Web scraping projects that require interaction with dynamic websites (e.g., logging in, clicking on elements).

- Developers who need a powerful automation tool for both web testing and data extraction.

Puppeteer

Puppeteer is another powerful web scraping tool, designed for interacting with web pages through a headless version of Chrome. It’s a Node.js library that gives developers full control over browser interactions, such as navigating, taking screenshots, and scraping data from websites that rely heavily on JavaScript.

Puppeteer Key Features:

Headless Browser Control: Puppeteer operates with a headless version of Chrome, meaning it runs without a graphical interface, speeding up web scraping tasks.

Automated Browsing: It allows users to automate tasks like clicking, scrolling, and filling forms, making it an excellent choice for scraping dynamic websites.

Extensive API: Puppeteer provides a rich API that gives developers full control over browser operations, making it perfect for complex scraping tasks.

Puppeteer Use Cases:

- Scraping modern websites that rely on JavaScript or require interactions like clicking or scrolling.

- Developers looking for a robust and flexible tool for scraping and browser automation.

Cheerio

Cheerio is a fast, flexible, and lightweight web scraping library built for Node.js. It implements a subset of jQuery’s core functionality and is highly effective at parsing HTML and XML. Cheerio is widely used by developers who need a fast, server-side solution for scraping data without the overhead of browser automation.

Cheerio Key Features:

jQuery Syntax: Cheerio mimics jQuery, making it familiar and easy to use for developers already acquainted with jQuery for frontend web development.

Lightweight and Fast: Cheerio’s ability to parse and manipulate HTML content quickly makes it perfect for extracting data from static websites or those that don’t require JavaScript rendering.

Server-Side Solution: Since it doesn’t rely on browser automation, Cheerio is lightweight and can handle scraping tasks with minimal resources.

Cheerio Use Cases:

- Developers who want to scrape data from static websites without the need for a full browser.

- Projects that require quick, efficient data extraction using server-side resources.

Scraper API

Scraper API is a web scraping service that handles proxy management, CAPTCHA solving, and other anti-scraping obstacles for you. It lets users scrape websites by rotating proxies and getting past hurdles such as CAPTCHAs or JavaScript challenges, without having to manage the underlying infrastructure.

Scraper API Key Features:

Automatic Proxy Rotation: Scraper API handles proxy rotation to prevent IP blocking, ensuring uninterrupted scraping across multiple websites.

CAPTCHA Solving: It automatically solves CAPTCHAs, allowing you to scrape data from sites that require this additional security measure.

Easy API Integration: Scraper API can be easily integrated with any web scraping framework or tool, making it a versatile addition to your scraping toolkit.

Scraper API Use Cases:

- Scraping large-scale websites that have aggressive anti-scraping measures, such as CAPTCHA or IP blocking.

- Users who need a hands-off solution for managing proxies, solving CAPTCHAs, and maintaining anonymity.
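
To give a sense of the chore that such a service takes off your hands, here is a generic, standard-library sketch of round-robin proxy rotation with retries. It is not Scraper API’s interface: the `ProxyRotator` class, the `fetch_with_rotation` function, and the proxy addresses (taken from a documentation-only IP range) are all hypothetical.

```python
import itertools
import urllib.error
import urllib.request


class ProxyRotator:
    """Hands out proxies from a fixed pool in round-robin order."""

    def __init__(self, proxies: list[str]):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._cycle)


def fetch_with_rotation(
    url: str, rotator: ProxyRotator, attempts: int = 3, timeout: float = 10.0
) -> bytes:
    """Try the URL through successive proxies until one succeeds."""
    last_error = None
    for _ in range(attempts):
        proxy = rotator.next_proxy()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        )
        try:
            with opener.open(url, timeout=timeout) as response:
                return response.read()
        except (urllib.error.URLError, TimeoutError) as exc:
            last_error = exc  # blocked or unreachable: move to the next proxy
    raise RuntimeError(f"all {attempts} attempts failed") from last_error


if __name__ == "__main__":
    # Placeholder proxies; replace with real endpoints before running.
    pool = ProxyRotator(["http://203.0.113.10:8080", "http://203.0.113.11:8080"])
    print(len(fetch_with_rotation("https://example.com", pool)))
```

A hosted service adds the parts this sketch leaves out, such as maintaining a healthy proxy pool and dealing with CAPTCHAs.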

ScrapingBee

ScrapingBee is an API that handles JavaScript rendering and proxy management, making it ideal for scraping websites that rely heavily on JavaScript for content generation. It allows users to fetch web pages as if they were being viewed by a real browser, ensuring that dynamic content is fully loaded before data is extracted.

ScrapingBee Key Features:

JavaScript Rendering: ScrapingBee renders JavaScript-heavy pages so that users can scrape the content exactly as it appears in a browser.

Proxy Management: It includes automatic proxy rotation and IP address management to avoid blocking and ensure continuous scraping.

API Integration: ScrapingBee is a simple API that integrates with your scraping setup and provides reliable data delivery.

ScrapingBee Use Cases:

- Scraping JavaScript-heavy websites that require content to be fully rendered before data extraction.

- Users who need an API-based solution that abstracts away complex technical details.

ZenScrape

ZenScrape is another scraping tool that focuses on automating the proxy rotation and data extraction process. Its key selling point is its scalability, making it ideal for large-scale scraping operations. With ZenScrape, users can extract data from a wide range of websites without worrying about IP blocks or rate limits.

ZenScrape Key Features:

IP Rotation and Proxy Management: ZenScrape includes a proxy pool to ensure your scraping tasks remain undetected by websites.

Simple API Integration: The tool provides an easy-to-use API for integrating web scraping into custom applications.

High Scalability: ZenScrape can scale to meet the demands of large scraping projects, handling significant volumes of data efficiently.

ZenScrape Use Cases:

- Large-scale data scraping projects that require reliable proxy management.

- Businesses needing to collect vast amounts of data from websites without encountering rate limits.

Diffbot

Diffbot is an advanced web scraping tool that leverages artificial intelligence (AI) to analyze and extract data from websites. Unlike traditional scraping tools, Diffbot uses machine learning to identify and extract relevant content from web pages, making it a powerful solution for users who need structured data from any website.

Diffbot Key Features:

AI and Machine Learning: Diffbot uses AI to automatically recognize content types (e.g., articles, images, products) and extract data accordingly.

Customizable Data Extraction: Users can customize the AI model to focus on specific content types or data points that matter most to their projects.

API Access: Diffbot provides an API that allows users to integrate its web scraping functionality into their existing workflows.

Diffbot Use Cases:

- Enterprises needing to collect structured data from a wide range of websites without manually setting up scraping rules.

- Users who want to leverage AI for web scraping and data extraction tasks.

ScrapeHero

ScrapeHero offers a fully managed web scraping service that specializes in data extraction from websites that use advanced techniques to block scrapers, such as CAPTCHAs and dynamic content. ScrapeHero provides both scraping infrastructure and managed services for businesses that need high-quality, reliable data.

ScrapeHero Key Features:

Full-Service Scraping: ScrapeHero offers both scraping infrastructure and managed services, handling all aspects of web scraping for businesses.

Data Cleaning and Structuring: The tool includes data cleaning and structuring features to ensure that the extracted data is in the desired format for analysis.

Scalability: ScrapeHero is designed to handle large-scale scraping operations with the capacity to manage millions of records.

ScrapeHero Use Cases:

- Businesses looking for a fully managed web scraping service to handle all aspects of data extraction and delivery.

- Users who need high-quality, structured data from complex websites.

Import.io

Import.io is a versatile web scraping tool designed to turn websites into structured data APIs. It offers both a no-code interface for non-technical users and an API for developers looking for a more flexible and programmable solution. Import.io’s automated scraping process ensures that the data is consistently up to date, making it a popular choice for businesses requiring ongoing data feeds.

Import.io Key Features:

No-Code Platform: Import.io allows users to create data extraction workflows without any programming knowledge, making it accessible to non-technical users.

Data API: For developers, Import.io provides an API that allows seamless integration into existing systems for programmatic access to scraped data.

Scheduling and Automation: Import.io supports automated scraping at regular intervals, ensuring users receive fresh data on a recurring basis.

Import.io Use Cases:

- Non-technical users who need an easy-to-use, no-code solution for data extraction.

- Businesses that require an API-based solution for integrating web data into their existing systems.

Bright Data

Bright Data, previously known as Luminati, is one of the most robust web scraping solutions on the market today. It offers a large-scale proxy network and data collection platform that allows users to scrape data without fear of being blocked. Bright Data provides powerful tools to automate data extraction while also enabling users to build their own scraping infrastructure.

Bright Data Key Features:

Massive Proxy Network: Bright Data has one of the largest and most reliable proxy networks in the industry, allowing users to bypass IP blocks and geo-restrictions easily.

Customizable Data Collection: Users can set up and manage their scraping projects with high customization, choosing the proxies and scraping methods that work best for their needs.

API and Integration: Bright Data provides an API and supports easy integration with third-party tools, making it ideal for enterprises with complex scraping needs.

Bright Data Use Cases:

- Enterprises requiring high-volume, reliable data extraction across multiple geographies.

- Businesses dealing with websites that use advanced anti-scraping technologies.

Apify

Apify is a powerful platform for automating data extraction, web scraping, and web crawling. Apify offers both a visual interface and an API, making it suitable for a wide range of users, from beginners to advanced developers. Apify also supports cloud execution, allowing users to scale their scraping operations easily.

Apify Key Features:

Visual Interface for Beginners: Apify provides an easy-to-use, drag-and-drop interface for non-technical users who want to set up their scraping tasks without coding.

Advanced Automation and Workflow: Apify supports task automation, allowing users to schedule tasks, manage workflows, and scale scraping projects as needed.

Cloud Execution: Apify operates in the cloud, enabling seamless scaling of scraping operations for large data extraction needs.

Apify Use Cases:

- Developers and businesses that need an automated solution for scraping large amounts of data from multiple sources.

- Non-technical users who need a visual platform to handle their web scraping needs.

WebScraper.io

WebScraper.io is an easy-to-use web scraping tool that allows users to create scraping agents to extract data from websites. Its point-and-click interface makes it a great option for beginners, while its advanced features make it suitable for more complex tasks. WebScraper.io is available as both a browser extension and a cloud-based solution.

WebScraper.io Key Features:

Browser Extension: The browser extension allows users to quickly scrape data directly from websites without needing additional software or configuration.

Data Export: WebScraper.io enables users to export scraped data in multiple formats, such as CSV, JSON, and Excel, which can be easily integrated with other data processing tools.

Advanced Features: For more experienced users, WebScraper.io offers support for handling multiple pages, pagination, and even login forms for scraping protected content.

WebScraper.io Use Cases:

- Users who need a simple yet effective solution for scraping data directly from their browsers.

- Businesses that need to extract data from multiple websites with minimal setup.

Playwright

Playwright is an open-source web scraping and browser automation framework developed by Microsoft. It allows developers to automate browsers such as Chromium, Firefox, and WebKit for web scraping, testing, and web automation tasks. Playwright is designed for handling dynamic and complex websites, and its API offers great flexibility for developers.

Playwright Key Features:

Cross-Browser Automation: Playwright supports automation across multiple browser engines, including Chromium, Firefox, and WebKit, which makes it versatile for scraping various types of websites.

Headless Mode: Playwright runs in headless mode by default, making it faster and more resource-efficient for scraping tasks; a visible (headed) browser can be enabled when debugging.

Advanced Interaction: It allows users to interact with pages, fill forms, capture screenshots, and scrape data, even from websites with complex layouts or content rendered via JavaScript.

Playwright Use Cases:

- Developers who need a high-performance, flexible web scraping tool for complex websites.

- Projects that require advanced automation and interaction, such as scraping dynamic content or simulating user behavior.

Conclusion

Web scraping is a powerful technique that helps businesses, researchers, and developers collect valuable data from the web. The tools we’ve explored offer a wide range of features for different needs, whether you’re looking for a simple no-code solution or a robust, developer-centric tool. Between them, they cover JavaScript-heavy websites, automated data extraction, and anti-scraping measures.

When choosing the right web scraping tool for your project, consider factors such as the complexity of the target websites, the volume of data you need, your technical expertise, and your desired level of automation. By selecting the appropriate tool, you can streamline your data collection process and gather actionable insights more reliably.